Earth Notes: On Website Technicals (2025-10)

Updated 2025-11-13.

: Yearly CO2 to SVG

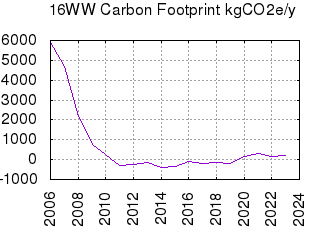

Given success with using SVG to replace PNG for simple charts, I have added SVG (and SVG pre-compressed with brotli) outputs from gnuplot for the yearly carbon footprint chart.

At the moment I am not running svgo on the server. If I were, the .svg would be about 3x smaller and the .svgbr about 2x smaller. However, even without svgo, the gzip and brotli compressed versions are still smaller than the .png and .webp.

3343 out/yearly/16WWYearlyEnergyCarbonFootprint.png

2560 out/yearly/16WWYearlyEnergyCarbonFootprint.png.webp

21693 out/yearly/16WWYearlyEnergyCarbonFootprint.svg

1551 out/yearly/16WWYearlyEnergyCarbonFootprint.svgbr

% gzip -6 < out/yearly/16WWYearlyEnergyCarbonFootprint.svg | wc -c

1958

The lack of svgo will be fixed, amongst other things, when I upgrade the server...

Oh, and Google's search AI Mode lied to me (again) that there is a precision n option for the gnuplot set terminal svg command to help reduce SVG file output size!

: From lockfile to flock

I have tentatively migrated a couple of build recipes in the main EOU makefile from locking with lockfile (which comes from the procmail package) to flock, which is already present on Linux and macOS.

Not only does this avoid a spurious package dependency, but also the flock scheme should be more robust for the non-networked filesystems EOU is built in.

A downside is that a lock file will need to be kept around for each target, albeit zero-length, though fewer file creations/deletions will be needed.
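A minimal sketch of the flock style of locking, assuming the util-linux flock(1) command-line tool. The helper name with_lock and the lock file path are hypothetical, and this is not the actual EOU makefile recipe:

```sh
# Hypothetical helper: run a command while holding an exclusive lock.
# Usage: with_lock LOCKFILE COMMAND [ARGS...]
with_lock() {
    lock=$1
    shift
    # flock opens the lock file (creating it if need be), waits for an
    # exclusive lock, runs the command, and drops the lock when it exits.
    # The zero-length lock file is left in place for the next build.
    flock -x "$lock" "$@"
}
```

In a makefile recipe this might be used as with_lock .target.lock sh -c '...build steps...', with one persistent lock file per target. The exit status is that of the wrapped command, so make still sees build failures.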

: Monthly Carbon

I am working on extending my current full-year grid electricity CO2 emissions to compute month-by-month and maybe even current month and current day results.

First up a new generic script to bucket FUELINST-based carbon-intensity into 1h blocks. This script was simply extracted from a larger existing one.

% gzip -d < data/FUELINST/log/202509.log.gz | sh script/analytic/FUELINST-bucket-1h-inner.sh | head

2025-09-01T00:00Z,85.6667

2025-09-01T01:00Z,83.6667

2025-09-01T02:00Z,86.5

2025-09-01T03:00Z,89.6667

2025-09-01T04:00Z,94.6667

2025-09-01T05:00Z,90.3333

2025-09-01T06:00Z,92.6667

2025-09-01T07:00Z,90.8333

2025-09-01T08:00Z,78.1429

2025-09-01T09:00Z,73.5

% gzip -d < data/FUELINST/log/202509.log.gz | sh script/analytic/FUELINST-bucket-1h-inner.sh | tail

2025-09-30T14:00Z,224.5

2025-09-30T15:00Z,235.5

2025-09-30T16:00Z,246.833

2025-09-30T17:00Z,246

2025-09-30T18:00Z,238.5

2025-09-30T19:00Z,234

2025-09-30T20:00Z,217.167

2025-09-30T21:00Z,200

2025-09-30T22:00Z,158.75

2025-09-30T23:00Z,153.636

% gzip -d < data/16WWHiRes/Enphase/adhoc/net_energy_202509.csv.gz | sh script/analytic/Enphase-bucket-1h-inner.sh | tail

2025-09-30T13:00Z,-229

2025-09-30T14:00Z,-216

2025-09-30T15:00Z,-804

2025-09-30T16:00Z,351

2025-09-30T17:00Z,10

2025-09-30T18:00Z,47

2025-09-30T19:00Z,52

2025-09-30T20:00Z,43

2025-09-30T21:00Z,11

2025-09-30T22:00Z,10
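The hourly bucketing can be sketched in a few lines of awk. This is not the real FUELINST-bucket-1h-inner.sh: it assumes a simplified, hypothetical input of "timestamp,value" CSV lines with ISO 8601 UTC timestamps, whereas the real log format is not shown here. It emits the mean of the samples in each hour, in first-seen order:

```sh
# Hypothetical sketch: mean value per hour from "YYYY-MM-DDTHH:MMZ,value" lines.
bucket_1h() {
    awk -F, '
    {
        hour = substr($1, 1, 13)               # "YYYY-MM-DDTHH"
        if (!(hour in sum)) order[++n] = hour  # remember first-seen order
        sum[hour] += $2
        count[hour]++
    }
    END {
        for (i = 1; i <= n; i++) {
            h = order[i]
            # The mean is converted with the default "%.6g" (eg 85.6667).
            printf "%s:00Z,%s\n", h, sum[h] / count[h]
        }
    }'
}
```

Buckets with no samples are simply absent from the output, so a later join against another hourly series would need to cope with gaps.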

: Intensity Calculation Broken

Oh dear: Latest data is from Tue Oct 21 13:10:00 UTC 2025.

The intensity calculation seems to be generating a bogus 'from' request time, and when I move the old long store away to let it start again, I get some internal errors that look like Java optimisation errors: java.lang.NullPointerException: Cannot read field "a" because "<local32>" is null.

And of course it works on my development laptop!

Investigating:

- Fixed a coding bug that caused a crash if the 7D summary could not be constructed (released reutils V1.4.2 with fix).

- Currently it looks like DNS; lookup of data.elexon.co.uk is failing.

Restarted BIND on the server and all seems well again.

The Delphic errors were due to Java minification with ProGuard, shrinking a field name and eliminating a local variable name. This minification roughly halves JAR size, so is worth occasional puzzlement!

: Inline SVG Micro-optimisation

To do... When inlining small SVG images in HTML with data URLs, URL encode rather than base64, since the former will typically compress much better. Preferably have the %xx hex digits be lower case, since the majority of the surrounding text will be.

No, using jq to URL encode was not on my bingo card either... Note that jq outputs upper case hex digits, so is not ideal.

% cat img/LIVE-20w.svg | wc -c

208

% cat img/LIVE-20w.svg | gzip -9 | wc -c

174

% cat img/LIVE-20w.svg | brotli | wc -c

142

% cat img/LIVE-20w.svg | base64 | wc -c

281

% cat img/LIVE-20w.svg | jq -sRr @uri | wc -c

349

% cat img/LIVE-20w.svg | base64 | gzip -9 | wc -c

247

% cat img/LIVE-20w.svg | jq -sRr @uri | gzip -9 | wc -c

210

% cat img/LIVE-20w.svg | base64 | brotli | wc -c

235

% cat img/LIVE-20w.svg | jq -sRr @uri | brotli | wc -c

182

This became obvious when looking at the EOU homepage compressed in the flateview ZIP visualiser.

flateview of part of the EOU home page ZIPped with start of base64 encoded inline SVG visible, mainly in purple because this encoding makes it especially hard to compress.

This also revealed that I have been using an uppercase letter in the &#x2B50; Unicode expansion of the 'star' marker (⭐) that I inject for popular pages. Now adjusted to &#x2b50; for better compression.

Also I now only put a "Count" after "References" when > 1, removing that paragraph from ~40 desktop pages.

: hand-rolled URL encoder

I have now written a script script/urlencode.sh to URL encode stdin to stdout with lowercase escape hex.

% cat img/LIVE-20w.svg | sh script/urlencode.sh | wc -c

348

% cat img/LIVE-20w.svg | sh script/urlencode.sh | gzip -9 | wc -c

202

% cat img/LIVE-20w.svg | sh script/urlencode.sh | brotli | wc -c

176

This avoids adding a trailing newline. A few bytes are saved in compression stand-alone with this encoding style: 8 with gzip -9 and 6 with brotli.
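A portable encoder along these lines can be sketched with od and awk. This is an illustration only, not the actual script/urlencode.sh, and the function name urlencode_lower is hypothetical. It leaves the RFC 3986 'unreserved' characters alone, escapes every other byte with lowercase hex, and emits no trailing newline:

```sh
# Hypothetical sketch: strict URL-encode stdin to stdout, lowercase hex.
urlencode_lower() {
    # od dumps each input byte as two lowercase hex digits.
    od -An -v -tx1 | awk '
    BEGIN {
        # Map the hex code of each unreserved character to the character.
        for (i = 33; i < 127; i++) {
            c = sprintf("%c", i)
            if (c ~ /^[A-Za-z0-9._~-]$/) keep[sprintf("%02x", i)] = c
        }
    }
    {
        # Emit each byte either as itself or as %xx; never a newline.
        for (f = 1; f <= NF; f++)
            printf "%s", (($f in keep) ? keep[$f] : "%" $f)
    }'
}
```

Working from the hex dump sidesteps the lack of a portable ord() in awk, and means that bytes outside ASCII are escaped byte by byte.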

I then let svgo have a go at the SVG source, and it managed to strip out 3 bytes (though may have changed behaviour), with the result still working in my browser!

% ls -alS img/LIVE-20w.*

205 img/LIVE-20w.svg

169 img/LIVE-20w.svggz

136 img/LIVE-20w.svgbr

% cat img/LIVE-20w.svg | sh script/urlencode.sh | brotli | wc -c

170

But maybe I need to URL-encode only the # character?

: first pass SVG inline URL encode

A first pass change has been made to inline SVG URL-encoded. Strict URL encoding is being used at this point. Much of the purple has already gone for the flateview of the inlined image.

Note that this micro-optimisation currently only affects about six (desktop) pages, all for the same 'LIVE' SVG beacon image!

A second pass script script/urlencode-dataURL.sh allows :/@ characters to remain unescaped, and was tested against Lynx, Firefox, Chrome, Safari, and the W3C HTML validator.

% cat img/LIVE-20w.svg | sh script/urlencode.sh | wc -c

343

% cat img/LIVE-20w.svg | sh script/urlencode-dataURL.sh | wc -c

319

% cat img/LIVE-20w.svg | sh script/urlencode.sh | brotli | wc -c

170

% cat img/LIVE-20w.svg | sh script/urlencode-dataURL.sh | brotli | wc -c

166

This relaxed encoding saves 24 bytes (~7%) uncompressed, and 4 bytes (~2%) after brotli compression.

flateview of part of the EOU home page ZIPped with start of relaxed URL-encoded inline SVG visible, less purple because this encoding makes it easier to compress in context.

A few more bytes are saved by dropping the redundant class=raw attribute from such images, since I do not style raw on EOU. That affects ~7 (desktop) pages.

I fixed svgo's breakage of my animation, adding back a few bytes! I also expanded the set of unencoded characters to /:@,.

% cat img/LIVE-20w.svg | wc -c

208

% cat img/LIVE-20w.svg | sh script/urlencode-dataURL.sh | wc -c

322

% cat img/LIVE-20w.svg | sh script/urlencode-dataURL.sh | brotli | wc -c

169

The current data URI for the img src attribute is:

data:image/svg+xml,%3csvg%20xmlns%3d%22http://www.w3.org/2000/svg%22%20width%3d%2220%22%20height%3d%2220%22%3e%3cstyle%3e@keyframes%20c%7b0%25,to%7bfill:red%7d50%25%7bfill:%23fcc%7d%7d%3c/style%3e%3ccircle%20cx%3d%2210%22%20cy%3d%2210%22%20r%3d%2210%22%20style%3d%22animation:c%2010s%20linear%200s%2020%22%20fill%3d%22%23fcc%22/%3e%3c/svg%3e
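A relaxed encoder producing a data URI of this shape might look like the following. Again this is a hedged sketch and not the actual script/urlencode-dataURL.sh: the function name svg_data_url is hypothetical, and the extra unescaped characters are taken to be / : @ and the comma, as seen in the URI above:

```sh
# Hypothetical sketch: wrap SVG on stdin as a relaxed URL-encoded data URI.
svg_data_url() {
    printf 'data:image/svg+xml,'
    od -An -v -tx1 | awk -v extra='/:@,' '
    BEGIN {
        # Keep RFC 3986 unreserved characters plus the extra set as-is.
        for (i = 33; i < 127; i++) {
            c = sprintf("%c", i)
            if (c ~ /^[A-Za-z0-9._~-]$/ || index(extra, c) > 0)
                keep[sprintf("%02x", i)] = c
        }
    }
    {
        # Everything else becomes %xx with lowercase hex digits.
        for (f = 1; f <= NF; f++)
            printf "%s", (($f in keep) ? keep[$f] : "%" $f)
    }'
}
```

The # character must stay escaped in any such scheme, since an unescaped # would start a URL fragment and truncate the image data.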

To save a few more bytes for inlined images, decoding=async (and loading=lazy) is no longer inserted. Since height and width are omitted for inlined images, this also tries to give the browser access to the image dimensions ASAP and thus reduce the risk of content movement.

To find any cached inlined headers with decoding=async, in order to remove them and force rebuilds:

% find img/a/h -type f -exec egrep -l 'data:image' {} +

AI code assist

I asked for AI (Google's search AI Mode) help with the encoder script, because it is hard to do with sed and awk, my usual tools, given gaps in their functionality, at least portably including on the Mac. The AI solution hallucinated some gawk functionality for the critical hard-to-do part. I have retained a part that the AI got tidily right, and solved the hard bit with normal Olde Worlde search results.

: ORCID Micro-optimisation

I have taken a few bytes out of the ORCID byline link on 'research' articles. I shortened the image path (with an alias image, ORCID.png). I also shortened the alt text to just "ORCID", thus no longer requiring quotes either. I changed the link URL to "ORCID.org" to help compression, with all mentions now being in the same case.

: Continuing RSS Nonsense

# Greedy podcast feed pullers: keys are MD5 hashed User-Agent.

# A non-empty txt map lookup of the %{md5:%{HTTP:User-Agent}} means bad!

# Built: 2025-10-17T11:36+00:00Z

# MAXHITSPERUAPERDAY: 7

# MAXUAS: 25

#----------------

# request-count User-Agent

# MD5hash approx-hits-per-day

#----------------

# 475 iTMS

97f76eb7e02c5ff923e1198ff1c288cd 475

# 304 Podbean/FeedUpdat

54e0e9df937b06cc83fab29f44c02b7f 304

# 203 Spotify/1.0

4582d9bdbcef42af27d89da91c6eb804 203

# 138 Google-Podcast

8dea568b39db0451edd6b30f29238eaf 138

# 98 Gofeed/1.0

4a9d728c458902d6ff716779ff72841d 98

# 73 -

d41d8cd98f00b204e9800998ecf8427e 73

# 34 axios/1.11.0

5c8ae194a6f98a725c992886f3da6e04 34

# 32 MuckRackFeedParse

62b46fff1cf5f8af7b4b37a2f783b57a 32

# 26 Amazon Music Podc

d69be2563c9f1929edf2906d41809aea 26

# 21 itms

2e7f714a929b3f52f3c094710819a99a 21

# 15 deezer/curl-3.0

b99188f8b12adffe0f92ae9c03f03c7c 15

# 13 PocketCasts/1.0 (

5caee5a0a53fcbcae25244d8770516ed 13

# 12 axios/1.6.8

b534882134248c9a5957e0c011a37037 12

# 11 TPA/1.0.0

86e71fcf5ba78d28f18270f7f83256bb 11

# 11 Mozilla/5.0 (Wind

6b9a00393fb1607b0ada13520f814ab5 11

# 11 FeedMaster/4.1

dca30fcc6f45af71c2825280bebba04d 11

# 10 Mozilla/5.0 (Maci

209eaa299125147cabf09b35d947b794 10

# 8 Overcast/1.0 Podc

c8bf931c39e0b216181afc441001e58b 8

# 8 AntennaPod/3.9.0

3975d4c899c67ddd6e9fa312444f71a8 8

# 7 PodchaserParser/2

599ce17a1a1a11800a9905e39fa49f10 7

I will put the threshold up to ~10, to avoid trapping relatively well-behaved clients, such as AntennaPod, with multiple distinct users.
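The shape of such a map can be sketched as below. This is an illustration under stated assumptions and not the real generator: it assumes one full User-Agent string per line on stdin (one line per hit, already extracted from the logs), a md5sum command as on Linux, and hypothetical function names. The comment lines in the real map truncate the User-Agent for display, which this sketch does not:

```sh
# Hypothetical helper: MD5 hex digest of a User-Agent string (no newline hashed).
ua_key() {
    printf '%s' "$1" | md5sum | cut -d' ' -f1
}

# Hypothetical sketch: emit map entries for UAs with at least THRESHOLD hits.
# Usage: greedy_ua_map THRESHOLD < one-UA-per-hit lines
greedy_ua_map() {
    threshold=$1
    sort | uniq -c | sort -rn |
    while read -r hits ua; do
        [ "$hits" -ge "$threshold" ] || continue
        printf '# %s %s\n%s %s\n' "$hits" "$ua" "$(ua_key "$ua")" "$hits"
    done
}
```

As a sanity check, the '-' (empty User-Agent) entry in the listing above has the key d41d8cd98f00b204e9800998ecf8427e, which is the MD5 of the empty string, as ua_key '' would produce.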

: Dashboard Micro-optimisation

My static dashboard HTML page, which uses meta http-equiv=refresh content=XXX to auto-update, is small but not fully minified, because it is maintained by hand.

% ls -alS _dashboard.html*

3295 Oct 26 2024 _dashboard.html

1175 Oct 26 2024 _dashboard.htmlgz

889 Oct 26 2024 _dashboard.htmlbr

It occurs to me that I could at least run it through the 'gentle' minimisation level to scrape a few more bytes. (Though this is a really tiny amount of network traffic anyway!) This changes a couple of lines of the source. (I also updated the copyright date.) I then have to force regeneration of the pre-compressed versions.

3254 Oct 14 11:17 _dashboard.html

1155 Oct 14 11:17 _dashboard.htmlgz

890 Oct 14 11:17 _dashboard.htmlbr

Oh look, the br is actually 1 byte larger!

So I tweaked some metadata, including removing some Twitter-only stuff:

3117 Oct 14 11:27 _dashboard.html

1122 Oct 14 11:27 _dashboard.htmlgz

867 Oct 14 11:27 _dashboard.htmlbr

I finally ran the updated page through the W3C Markup Validation Service to make sure that I had not broken anything!
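Forcing regeneration of the pre-compressed versions of a hand-maintained page could be sketched as follows. The helper name recompress is hypothetical, the compression levels are assumptions, and the suffix-without-dot naming (htmlgz, htmlbr) simply follows the listings above:

```sh
# Hypothetical helper: rebuild the pre-compressed siblings of one file
# and report the resulting sizes in bytes.
recompress() {
    src=$1
    gzip -9 -c "$src" > "${src}gz"
    # brotli may not be installed everywhere, so it is optional here.
    if command -v brotli >/dev/null 2>&1; then
        brotli -q 11 -c "$src" > "${src}br"
    fi
    for f in "$src" "${src}gz" "${src}br"; do
        if [ -f "$f" ]; then
            printf '%s %s\n' "$(wc -c < "$f" | tr -d ' ')" "$f"
        fi
    done
}
```

Running recompress _dashboard.html after each edit keeps the compressed copies in step with the source, and makes surprises such as a larger br file visible immediately.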

: ISSN Lookup from Bibliography

I have added automatic linking from ISSNs in bibliography entries via The ISSN Portal.
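One way to generate such links safely is to check the ISSN check digit first. The sketch below is not the EOU implementation: the function names are hypothetical, and the portal.issn.org/resource/ISSN/ URL pattern is my assumption about the ISSN Portal's link format. The check digit uses weights 8 down to 2 on the first seven digits, modulo 11, with X standing for 10:

```sh
# Hypothetical sketch: succeed if $1 is a well-formed ISSN (NNNN-NNNC).
issn_valid() {
    printf '%s\n' "$1" | awk '
    /^[0-9][0-9][0-9][0-9]-[0-9][0-9][0-9][0-9Xx]$/ {
        digits = substr($0, 1, 4) substr($0, 6, 3)
        sum = 0
        for (i = 1; i <= 7; i++) sum += substr(digits, i, 1) * (9 - i)
        check = (11 - sum % 11) % 11
        want = (check == 10) ? "X" : check ""
        if (toupper(substr($0, 9, 1)) == want) ok = 1
    }
    END { exit (ok ? 0 : 1) }'
}

# Hypothetical sketch: emit an HTML link for a valid ISSN, else the bare text.
issn_link() {
    if issn_valid "$1"; then
        # Assumed URL pattern for the ISSN Portal.
        printf '<a href="https://portal.issn.org/resource/ISSN/%s">%s</a>\n' "$1" "$1"
    else
        printf '%s\n' "$1"
    fi
}
```

Falling back to the bare text for anything that fails validation avoids injecting broken links from typos in bibliography entries.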

Something similar could be done with ISBNs.

: Allowing Ads on Lite/Mobile Site

Ads have generally not been allowed on the m-dot / mobile / lite site since those users' bandwidth may be most precious.

However, I want to probe if there is significant potential revenue there, so I have added a 'soft' configuration parameter to allow me to turn this stuff on and off more easily.

As ever, this took a couple of rounds to get right. Having created a soft parameter to allow ad injection on 'lite' pages, I then also had to enable computation of page popularity rank for 'lite' pages since unpopular pages generally do not get to show ads anyway!

: Podcast Episode RSS Description More Verbose

Some (podcast) RSS users want all the text of all the episodes in the RSS feed. I do not want to do that since:

- Detailed HTML styling and other control (eg for efficiency) does not seem possible.

- It is a terrible redundant waste of bandwidth for a full archive feed, with every reader having to pay over and over in full for items of no interest or already read.

However, what I have now done, where safe (no lurking HTML tags nor entities), is append the pgintro paragraph to the RSS description for new-ish episodes (up to ~90 days old).

Feed bloat is capped, but readers get to see a little more to reel them in...
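The rule can be sketched as a small shell function. This is an illustration only: the function names are hypothetical, the episode age is assumed to be supplied already computed in days, and 'safe' is approximated as containing none of the characters <, > or &:

```sh
# Hypothetical helper: succeed if the text has no HTML tag or entity characters.
is_plain_text() {
    case $1 in
        *'<'*|*'>'*|*'&'*) return 1 ;;
        *) return 0 ;;
    esac
}

# Hypothetical sketch: build the RSS description for one episode.
# Usage: rss_description BASE_DESCRIPTION INTRO_PARAGRAPH AGE_IN_DAYS
rss_description() {
    desc=$1
    intro=$2
    age_days=$3
    # Only new-ish episodes with a safe intro get the longer description.
    if [ "$age_days" -le 90 ] && is_plain_text "$intro"; then
        printf '%s %s\n' "$desc" "$intro"
    else
        printf '%s\n' "$desc"
    fi
}
```

Because older episodes revert to the short description, the extra text in the feed is bounded by however many episodes fall inside the age window.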

: AdSense Fail

Google keeps claiming that I do not publish the ads.txt for my hd.org zone/domain, though it never moved or went away. So today rather than yet again manually convince Google to actually look at the URL unchanged for years, I removed all ads for the zone and dropped authorisation in AdSense. Google's (tiny) loss I think, if it cannot get some basics right.

: Sitebulb

I gave Sitebulb 8.10 a whirl and found/fixed a few minor errors such as subtle broken internal links.

But Sitebulb claimed that the majority of my HTML pages are so badly broken that browsers may not even be able to display them. Those pages pass validation with the W3C validator and others, and work fine in Firefox, Chrome, Safari and Lynx... (I suspect I know exactly why, because of a valid minification that I use.)

So that suggests to me that the tool will not be much use to me for now.